- AI chatbots and video generators use a large amount of energy and water

- A five-second AI video uses as much energy as running a microwave for an hour or more.

- Data center energy use has doubled since 2017, and AI will account for about half of it by 2028

It takes only a few minutes in a microwave to explode a potato you haven't ventilated, but for an AI model to make a five-second video of a potato exploding, it takes as much energy as running that microwave for more than an hour, enough for more than a dozen potato explosions.

A new study from MIT Technology Review lays out just how energy-hungry AI models are. A basic chatbot reply can use as little as 114 joules or as much as 6,700 joules, the equivalent of running a standard microwave for anywhere from a fraction of a second to eight seconds. It's when things get multimodal that the energy cost skyrockets: a five-second video takes about 3.4 million joules, or more than an hour of microwave time.
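To make the joules-to-microwave comparison concrete, here is a small Python sketch that divides each energy figure by a microwave's power draw. The 800 W wattage is an assumption chosen for illustration, not a number given in the study, and the function name `microwave_seconds` is hypothetical.

```python
# Sketch: convert an energy figure in joules into "seconds of microwave time".
# ASSUMPTION: a standard microwave draws about 800 W. This wattage is
# illustrative only; it is not a figure stated in the article.
MICROWAVE_WATTS = 800.0


def microwave_seconds(joules: float, watts: float = MICROWAVE_WATTS) -> float:
    """Seconds a microwave drawing `watts` could run on `joules` of energy."""
    # 1 watt = 1 joule per second, so time = energy / power.
    return joules / watts


# Energy figures quoted in the article.
figures = {
    "smallest chatbot reply": 114,
    "largest chatbot reply": 6_700,
    "five-second AI video": 3_400_000,
}

for label, joules in figures.items():
    secs = microwave_seconds(joules)
    print(f"{label}: {joules:,} J, about {secs:,.1f} s ({secs / 60:,.1f} min) of microwave time")
```

At 800 W, the 3.4 million joules behind a five-second video works out to 4,250 seconds, a little over 70 minutes. A more powerful microwave would shorten every figure proportionally, so treat the comparison as a rough scale rather than an exact measurement.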

It's not a new revelation that AI is energy-intensive, but MIT's work lays out the math in stark terms. The researchers sketched what a typical session with an AI chatbot might look like: you ask 15 questions, request 10 AI-generated images, and throw in requests for three different five-second videos.

A minute after you ask for it, you can watch a realistic fantasy film scene that appears to have been shot in your backyard, but you won't notice the huge amount of electricity you demanded to produce it. You've requested roughly 2.9 kilowatt-hours, or three and a half hours of microwave time.
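The session total can be checked the same way. This sketch, again assuming a hypothetical 800 W microwave, converts the roughly 2.9 kWh estimate into joules and then into hours of running time; the helper names are illustrative only.

```python
# Sketch: convert the article's ~2.9 kWh session estimate into microwave hours.
# ASSUMPTION: an 800 W microwave, chosen for illustration only.
JOULES_PER_KWH = 3_600_000  # 1 kWh = 1,000 W * 3,600 s
MICROWAVE_WATTS = 800.0


def kwh_to_joules(kwh: float) -> float:
    """Convert kilowatt-hours to joules."""
    return kwh * JOULES_PER_KWH


def microwave_hours(kwh: float, watts: float = MICROWAVE_WATTS) -> float:
    """Hours a microwave drawing `watts` could run on `kwh` kilowatt-hours."""
    # Energy (kWh) divided by power (kW) gives time in hours.
    return kwh * 1000.0 / watts


session_kwh = 2.9  # session estimate quoted in the article
print(f"{session_kwh} kWh = {kwh_to_joules(session_kwh):,.0f} J")
print(f"about {microwave_hours(session_kwh):.2f} hours at {MICROWAVE_WATTS:.0f} W")
```

With those assumptions the session comes to about 3.6 hours, close to the three and a half hours quoted. At 1,000 W it would be closer to 2.9 hours, so the comparison shifts with the wattage you assume.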

What makes the cost of AI stand out is how painless it seems from the user's point of view. You're not budgeting AI messages the way we all budgeted our text messages 20 years ago.

AI energy rethink

Sure, you're not mining bitcoin, and your video has at least some real-world value, but that's a low bar when it comes to ethical energy use. And the energy demand from data centers is rising at a ridiculous pace.

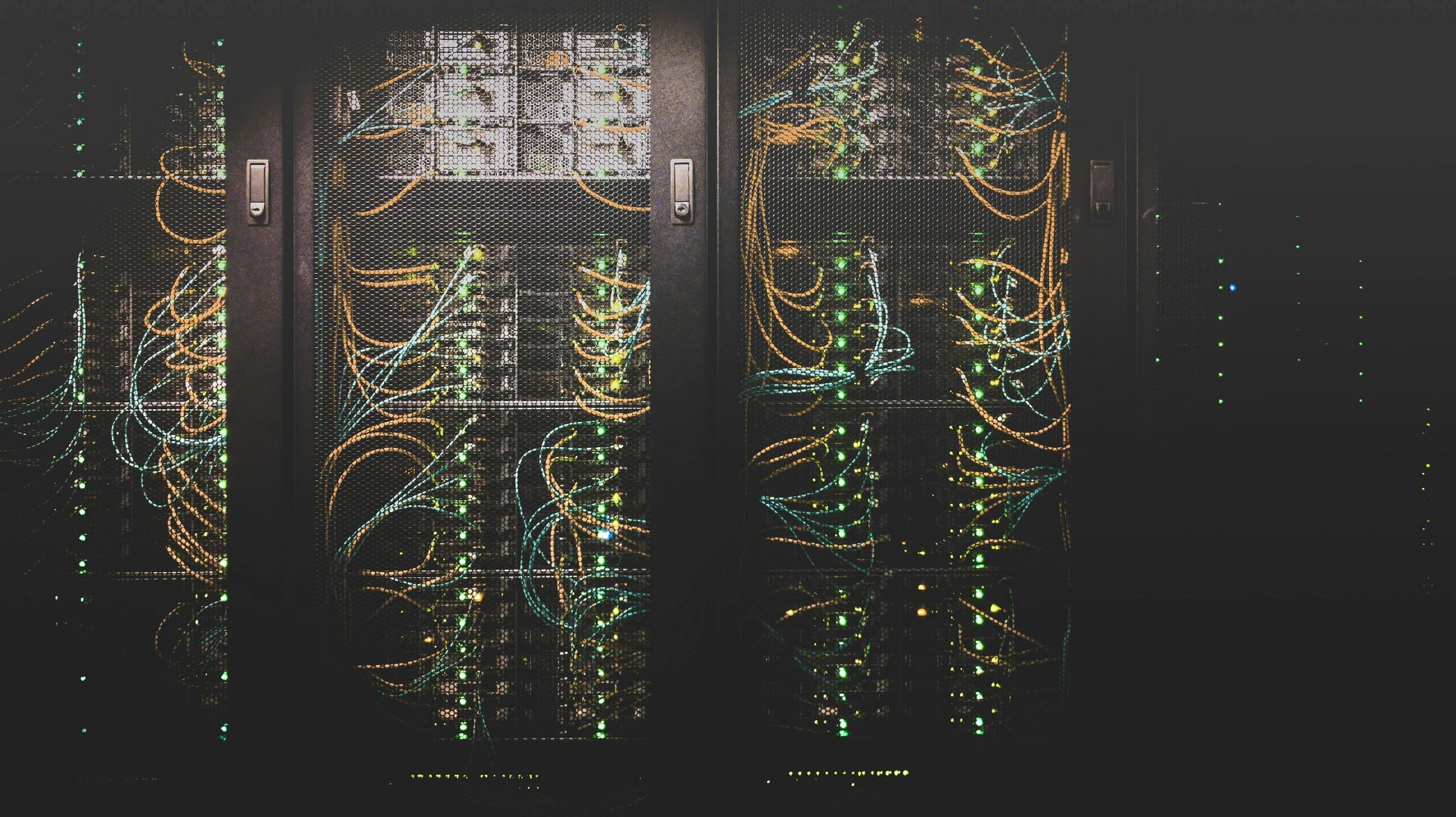

Before the AI boom, data center energy use had been leveling off thanks to efficiency gains. Since 2017, however, the energy consumed by data centers has doubled, and about half of it will go to AI by 2028, according to the report.

This isn't a guilt trip, by the way. I can claim professional reasons for some of my AI use, but I've also used it for all kinds of entertainment and to help with personal tasks. I'd write an apology note to the people working in the data centers, but I'd need AI to translate it into the languages spoken at some of the data center locations. And I don't want to sound heated, or at least not as hot as those servers get. Some of the largest data centers use millions of gallons of water daily to stay frosty.

The developers behind the AI infrastructure understand what's happening. Some are trying to source cleaner energy options, and Microsoft wants to strike deals with nuclear power plants. AI may or may not be integral to our future, but I'd prefer that future not be full of power cords and boiling rivers.

On an individual level, your use or avoidance of AI won't make much difference, but encouraging data center owners to adopt better energy solutions could. The most optimistic outcomes involve developing more energy-efficient chips, better cooling systems, and greener energy sources. And maybe AI's carbon footprint should be discussed like that of any other energy infrastructure, such as transportation or food systems. If we're willing to debate the sustainability of almond milk, we can surely spare a thought for the 3.4 million joules it takes to make a five-second video of dancing cartoon almonds.

As tools such as ChatGPT, Gemini, and Claude become smarter, flashier, and more embedded in our lives, the pressure on energy infrastructure will only increase. If that growth happens without a plan, we'll be trying to cool a supercomputer with a paper fan while chewing on a raw potato.