Summary

- The Continuous Thought Machine (CTM) model builds time into a neural network for more human-like problem solving.
- CTMs could bridge the gap between LLMs and human-like adaptability in AI learning models, but they require more resources.
- The CTM model faces challenges such as longer training times, slower speed, and lower accuracy compared to current models.

Do AI models “think”? This is an important question, because when you use something like ChatGPT or Claude, it sure looks like the bot is thinking. There are even little indicators that say “thinking”, and these days you can read a bot's “chain of thought” to see how it reasons its way to a conclusion.

The truth, however, is that while LLMs and other AI models mimic certain aspects of thinking, they still don't work the way a natural brain does. New research on Continuous Thought Machines (CTMs) could change that.

What is a CTM?

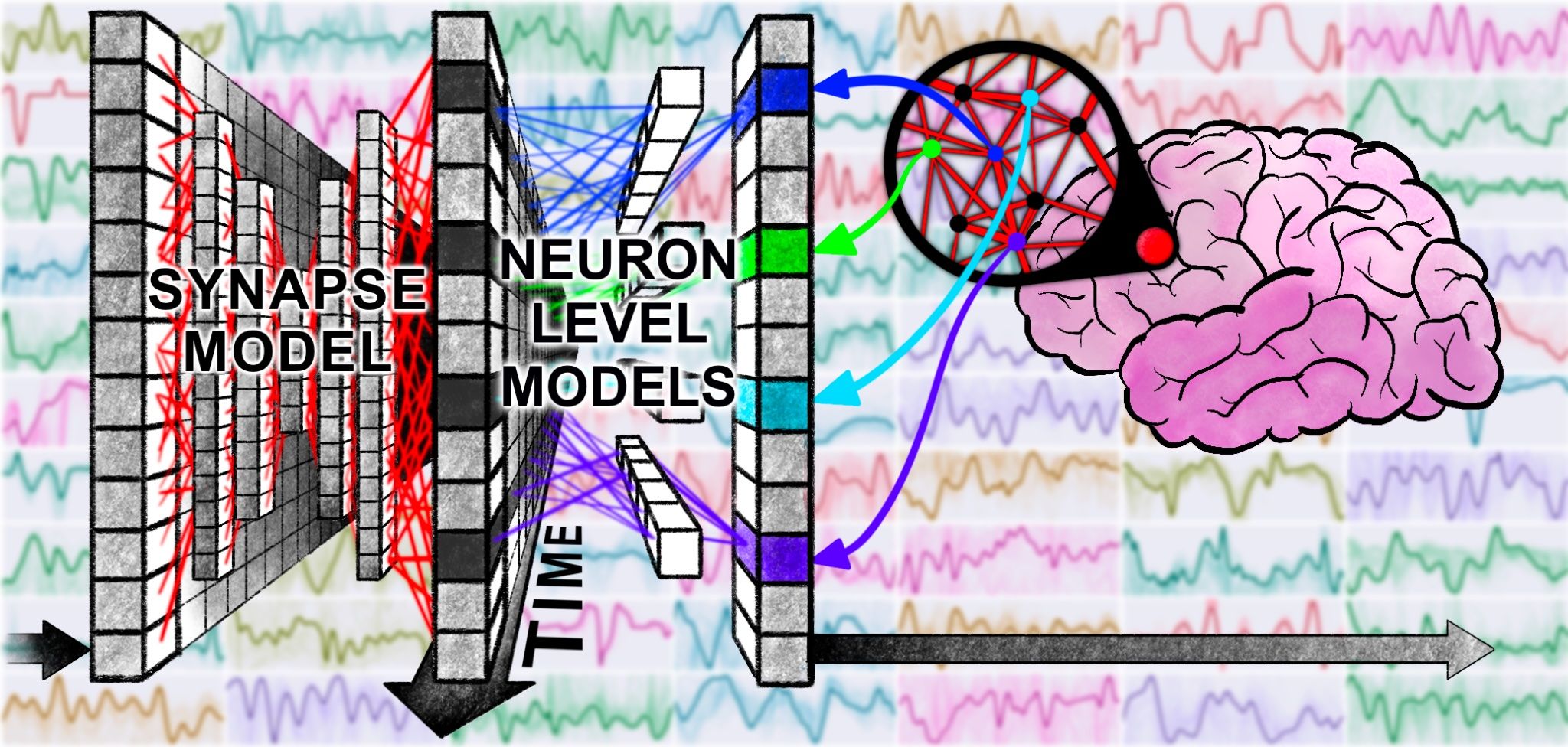

A Continuous Thought Machine (CTM) is a new type of neural network that literally builds time into its thinking. Instead of a normal one-shot calculation, each CTM neuron keeps track of its previous activity and uses that history to decide what to do next. In May 2025, Sakana AI detailed the CTM model in a research paper and a blog post.
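To make the idea of a neuron with memory concrete, here is a toy Python sketch. It is not Sakana's implementation: the class name `HistoryNeuron`, the single weight per remembered step, and the `tanh` output are illustrative assumptions. As I understand the paper, each neuron has its own small private model over a window of recent inputs; this sketch collapses that model to one weighted sum.

```python
from collections import deque
import math


class HistoryNeuron:
    """Hypothetical toy neuron that remembers its last few inputs.

    It applies its own private weights to a rolling window of recent
    pre-activations, so its output depends on history, not just the
    current input. This is an illustration, not the CTM paper's code.
    """

    def __init__(self, weights, bias=0.0):
        self.weights = list(weights)  # one weight per remembered step, oldest first
        self.bias = bias
        # Start with an all-zero history; maxlen drops the oldest entry on append.
        self.history = deque([0.0] * len(self.weights), maxlen=len(self.weights))

    def step(self, pre_activation):
        self.history.append(pre_activation)
        total = sum(w * x for w, x in zip(self.weights, self.history))
        return math.tanh(total + self.bias)


neuron = HistoryNeuron(weights=[0.2, 0.3, 0.5])
outputs = [neuron.step(x) for x in [1.0, 1.0, 1.0, 0.0]]
```

The same input (1.0) produces a different output on each of the first three steps, because the neuron's answer depends on what it saw before, not only on what it sees now.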

Sakana claims it is a new type of artificial neural network that more closely mimics how natural brains work. Neurons in a CTM don't just fire once and are done; they have a small “memory”, and they can synchronize their firing patterns with other neurons. The network's internal state is defined by these patterns of synchrony over time.
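Synchronization can be sketched the same way. In this simplified version, which is an assumption rather than the paper's exact formula, each neuron's activity over several ticks is a list of numbers, and the synchronization between two neurons is the dot product of those lists: large and positive when they fire together, negative when they fire out of phase. The published model is more elaborate; the function name `synchronization` is hypothetical.

```python
def synchronization(histories):
    """Pairwise synchronization matrix for a toy network.

    histories[i] is neuron i's activity over the same T ticks.
    Entry [i][j] is the dot product of the two activity traces.
    """
    n = len(histories)
    return [
        [sum(a * b for a, b in zip(histories[i], histories[j])) for j in range(n)]
        for i in range(n)
    ]


# Three neurons over four ticks: 0 and 1 fire in step, 2 fires out of phase.
traces = [
    [1.0, -1.0, 1.0, -1.0],
    [1.0, -1.0, 1.0, -1.0],
    [-1.0, 1.0, -1.0, 1.0],
]
S = synchronization(traces)
```

In this toy reading, the matrix `S` (not any single neuron's output) is what represents the network's state at a given moment.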

This resembles the synchronized activity in biological brains that gives rise to brain waves, and it makes CTMs very different from standard deep networks or transformers. For example, a typical transformer-based model processes a fixed chunk of text (or a single image) in a fixed number of steps. Essentially, it thinks in one short, defined burst, and then goes brain-dead while it waits for your next prompt.
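The contrast with a one-shot model can be shown as a loose analogy in code; this is not a CTM. `one_shot` runs a fixed stack of steps once, while `think_in_ticks` revisits the same input over a chosen number of internal ticks and refines its state each time. Newton's method for a square root is a hypothetical stand-in for the model's update step, picked only because it shows how extra ticks can yield a better answer.

```python
import math


def one_shot(layers, x):
    # Transformer-style: pass the input through a fixed stack once, then stop.
    for layer in layers:
        x = layer(x)
    return x


def think_in_ticks(update, state, x, ticks):
    # CTM-style (toy): revisit the same input `x` for `ticks` internal steps,
    # refining `state` each time and recording every intermediate answer.
    trajectory = []
    for _ in range(ticks):
        state = update(state, x)
        trajectory.append(state)
    return trajectory


def newton_sqrt_step(guess, x):
    # Hypothetical stand-in for a learned update: one Newton step toward sqrt(x).
    return 0.5 * (guess + x / guess)


fixed = one_shot([lambda v: v + 1, lambda v: v * 2], 3)
answers = think_in_ticks(newton_sqrt_step, 1.0, 2.0, ticks=5)
errors = [abs(a - math.sqrt(2.0)) for a in answers]
```

The number of ticks is its own “thinking” dimension, independent of how long the input is: each extra tick moves the estimate closer to the square root of 2.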


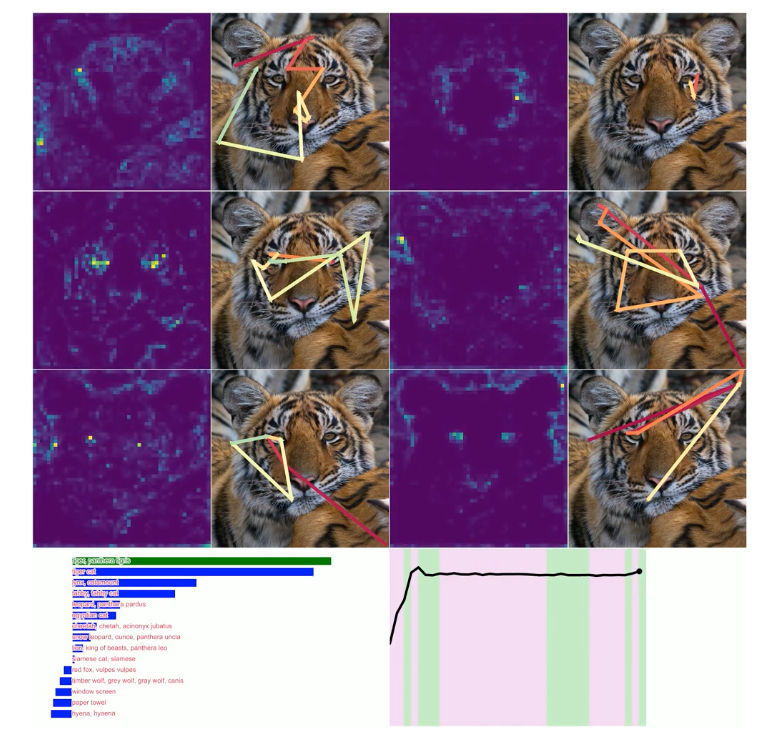

The implications here are profound, if I understand correctly what this means (and it's very possible that I don't!). This quote from the post about solving mazes and looking at photos really struck me:

Remarkably, despite not being explicitly designed to do so, the solution it learns on mazes is very interpretable and human-like, where we can see it tracing out the path through the maze as it ‘thinks’ about the solution. For real images, there is no explicit incentive to look around, but it does so in an intuitive way.

The post also sums it up well:

We call the resulting AI model the Continuous Thought Machine (CTM), a model capable of using this new time dimension, rich neuron dynamics and synchronization information to ‘think’ about a task and plan before giving its answers. We use the term ‘continuous’ in the name because the CTM operates entirely in an internal ‘thinking dimension’ when reasoning. It is agnostic with respect to the data it consumes: it can reason about static data (e.g., images) or sequential data in an identical fashion. We tested this new model on a wide range of tasks and found that it is capable of solving diverse problems, often in a very interpretable way.

Until we see more benchmarks from independent parties, I don't want to overhype this idea, but some of what I'm reading edges uncomfortably close to the territory of rudimentary consciousness.


Why is this better than current neural networks?

The whole CTM concept essentially does away with the idea of “one-shotting” problems, which is often seen as the gold standard for AI models: you need to get the right answer most of the time within a fixed window, by pushing the problem through the transformer network once. That's the type of neural network that powers ChatGPT, for example.

This is one reason current LLMs don't have a good way to correct themselves when they get things wrong, even if that only happens a relatively small percentage of the time. Chain-of-thought reasoning has brought improvements, as has bouncing answers back and forth between two models until the output is self-consistent, but it seems the CTM approach may close a significant gap in accuracy and reliability.

Going by the promise outlined in Sakana's paper, this could mean combining the strengths of models like LLMs with the adaptability and development of biological brains. I also think it has implications for robotics, helping embodied machines learn, grow, and exist in the physical world the way we do.


The downsides of overthinking

Continuous thinking is powerful, but it comes with trade-offs. First, CTMs are more complex and resource-hungry than plain feedforward networks. Letting each neuron carry its history greatly expands the network's internal state. In practice, training a CTM can demand a lot of compute and memory. CTMs may take longer to train and may need more data or more iterations to converge. Inference can also slow down if the model chooses to take many thinking steps on a hard input.
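A back-of-the-envelope count shows why carrying history is expensive. The sizes below (512 neurons, a 25-step memory, 50 ticks) are hypothetical, not Sakana's settings, and the tally assumes the model keeps each neuron's memory window, its full activity trace, and one synchronization value per neuron pair. The function name `ctm_state_floats` is illustrative.

```python
def ctm_state_floats(neurons, memory_length, ticks, pairs=None):
    """Rough count of numbers a toy CTM holds per example (assumed layout).

    - memory window: neurons * memory_length recent inputs
    - activity trace kept for synchronization: neurons * ticks
    - synchronization: one value per neuron pair, D * (D + 1) // 2 if all
      pairs (including each neuron with itself) are tracked
    """
    memory = neurons * memory_length
    trace = neurons * ticks
    if pairs is None:
        pairs = neurons * (neurons + 1) // 2
    return memory + trace + pairs


plain_layer = 512                                     # one activation per neuron
full = ctm_state_floats(512, 25, 50)                  # every neuron pair tracked
sampled = ctm_state_floats(512, 25, 50, pairs=1024)   # only a sampled subset of pairs
```

With these assumed sizes, the toy CTM holds 169,728 numbers per example versus 512 for a plain layer of the same width. Most of that comes from the pair count, which grows with the square of the number of neurons, so tracking only a sampled subset of pairs is one obvious way to keep it manageable.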

In addition, today's tools and libraries are built around static models, not CTMs, for obvious reasons. So if there really is something to this, it will take a while for the tooling to catch up.

The second major problem is an obvious one: when should it stop thinking? There's a risk of “runaway” thinking, where the CTM just goes around in circles. So there will need to be some fairly sophisticated rules to help it work out when it's done. Without them, you could get error amplification and the same kind of hallucinations we already have, as the model drifts away from the ground truth it started with.
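One simple stopping rule is to halt once the model is confident enough, with a hard cap on ticks as a safety net against runaway loops. The sketch below measures confidence as one minus the normalized entropy of the predicted probabilities, which, as I understand it, is also how the CTM paper defines certainty. The threshold, the cap, and the `predict_at_tick` callback are hypothetical, and this loop is an assumed rule, not Sakana's method.

```python
import math


def certainty(probs):
    """1 minus normalized entropy: 1.0 = fully confident, ~0.0 = uniform guess.

    Assumes at least two classes so the normalizer log(len(probs)) is nonzero.
    """
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return 1.0 - entropy / math.log(len(probs))


def run_until_confident(predict_at_tick, threshold=0.9, max_ticks=50):
    """Keep 'thinking' until certainty clears the threshold or the cap is hit.

    predict_at_tick(t) is a hypothetical callback returning class
    probabilities after internal tick t (ticks are numbered from 1).
    """
    for t in range(1, max_ticks + 1):
        probs = predict_at_tick(t)
        if certainty(probs) >= threshold:
            return t, probs
    return max_ticks, probs  # gave up: the cap bounds cost, not correctness


def sharpening(t):
    # Toy model that is unsure for three ticks, then commits to class 0.
    return [1 / 3, 1 / 3, 1 / 3] if t < 4 else [0.99, 0.005, 0.005]


stop_tick, final_probs = run_until_confident(sharpening)
stuck_tick, _ = run_until_confident(lambda t: [0.5, 0.5], max_ticks=10)
```

The sharpening model stops at tick 4, while the one that never commits runs to the cap. The cap prevents endless loops, but it cannot make a wrong answer right, which is why better stopping rules matter.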

The final main issue, and it's a biggie, is that this early CTM model is still far from matching the best current transformer models on accuracy. According to a report by VentureBeat, it currently falls well short on accuracy benchmarks.


Our minds remain a mystery

While what I've seen of CTMs so far, based on Sakana AI's paper, looks very much like what we see in the human mind, the truth is that we still don't really know how our own minds work. It may be that AI researchers have stumbled onto the same solution that natural selection arrived at for us and other animal species, or it may be that we have built something on a parallel track that could eventually be just as capable.

For some time I've felt that current models are only part of the puzzle of more general AI, much as an LLM is more like the brain's language center than the whole brain, and at first glance CTMs look to me like another piece of that puzzle. So I'll be following Sakana's progress with great interest.