Have you Googled something recently, only to be met with a cute little diamond logo above some magically appearing words? Google’s AI Overviews combine Google Gemini’s language models (which generate the responses) with Retrieval-Augmented Generation, which pulls in the relevant information.

In theory, it makes an incredible product, Google’s search engine, even easier and faster to use.

However, because creating these summaries is a two-step process, issues can arise when there is a disconnect between the retrieval and the language generation.

While the retrieved information may be accurate, the AI can make erroneous leaps and draw strange conclusions when generating the summary.

This has led to some famous gaffes, such as when it became the laughing stock of the internet in mid-2024 for recommending glue as a way to ensure that cheese would not slide off your homemade pizza. And we loved the time it described running with scissors as a cardio exercise that can improve your heart rate and requires concentration and focus.

These prompted Liz Reid, the head of Google Search, to publish an article titled “About last week”, stating that these examples “highlighted some specific areas that we needed to improve”. More than that, she diplomatically blamed “nonsensical queries” and “satirical content”.

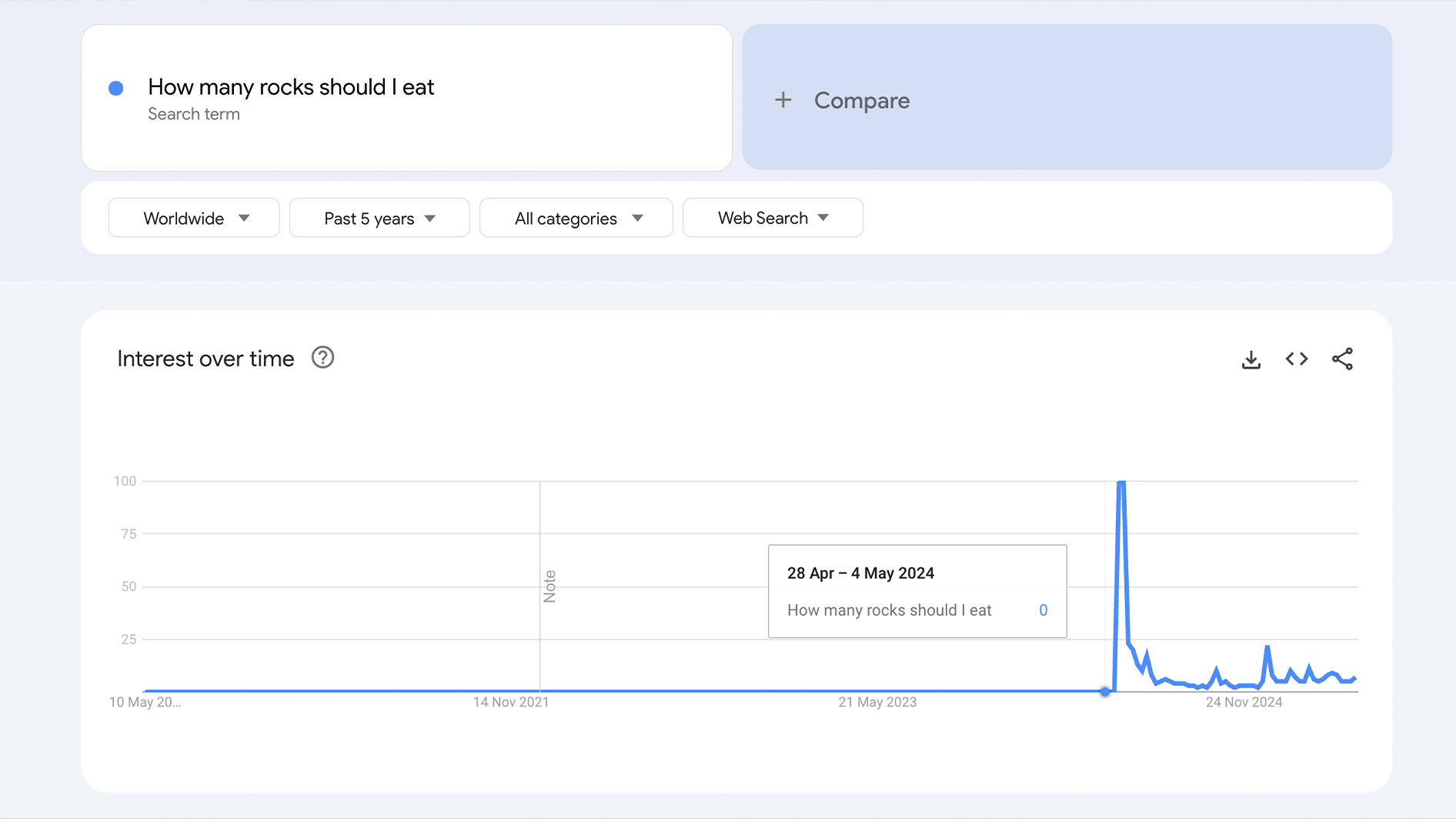

She was at least partly right. Some of the problematic queries were highlighted purely in the interest of making the AI look foolish. As you can see below, the query “How many rocks should I eat?” was not a common search before AI Overviews were introduced, and it has not been since.

However, almost a year on from the pizza-glue fiasco, people are still tricking Google’s AI Overviews into fabricating information, or “hallucinating”, the euphemism for AI lies.

Many misleading queries seem to be ignored as of writing, but just last month Engadget reported that AI Overviews would make up explanations for pretend idioms like “you can’t marry pizza” or “never rub a basset hound’s laptop”.

So, AI is often wrong when you deliberately trick it. Big deal. But now that it is being used by billions and includes crowd-sourced medical advice, what happens when a genuine question causes it to hallucinate?

While AI works wonderfully if everyone who uses it checks where it sourced its information from, many people (if not most people) are not going to do that.

And therein lies the key problem. As a writer, I already find AI Overviews inherently a bit annoying because I want to read human-written content. But, even putting my pro-human bias aside, AI becomes seriously problematic if it is so easily untrustworthy. And it is arguably downright dangerous now that it is basically ubiquitous when searching, and a certain portion of users are going to take its information at face value.

I mean, years of searching have trained all of us to trust the results at the top of the page.

Wait... is that true?

Like many people, I can sometimes struggle with change. I did not like it when LeBron went to the Lakers, and I stuck with an MP3 player over an iPod for far too long.

However, given that it is now the first thing I see on Google most of the time, Google’s AI Overviews are a bit harder to ignore.

I have tried to use it like Wikipedia: potentially unreliable, but good for reminding me of forgotten information or for learning the basics of a subject that will not cause me any grief if it is not 100% accurate.

Yet even on seemingly simple queries it can fail spectacularly. As an example, I was watching a film the other week and one of the actors really looked like Lin-Manuel Miranda (creator of the musical Hamilton), so I Googled whether he had any brothers.

The AI Overview informed me that “Yes, Lin-Manuel Miranda has two younger brothers, named Sebastian and Francisco.”

For a few minutes I thought I was a genius at recognizing people... until a little more research showed that Sebastian and Francisco are actually Miranda’s two children.

Wanting to give it the benefit of the doubt, I figured it would have no trouble listing quotes from Star Wars to help me think of a headline.

Fortunately, it gave me exactly what I wanted: “Hello there!” and “It’s a trap!”, and it even quoted “No, I am your father” rather than the all-too-commonly repeated “Luke, I am your father”.

Along with these legitimate quotes, however, it claimed that Anakin said “If I go, I go with a bang” before his transformation into Darth Vader.

I was shocked at how it could be so wrong... and then I started second-guessing myself. I gaslit myself into thinking that I must be mistaken. I was so unsure that I triple-checked the quote’s existence and shared it with the office, where it was quickly (and correctly) dismissed as another bout of AI lunacy.

This small bout of self-doubt, about something as silly as Star Wars, scared me. What if I had no knowledge at all of a topic I was asking about?

A study by SE Ranking actually shows that Google’s AI Overviews avoid (or respond cautiously to) the subjects of finance, politics, health and law. This means Google knows that its AI is not yet up to the task of answering more serious questions.

But what happens when Google thinks it has improved to the point that it can?

It’s the tech... but also how we use it

If everyone using Google could be trusted to double-check the AI results, or to click through to the source links provided by the overview, then its inaccuracies would not be a problem.

But as long as there is an easier option, a more frictionless path, people tend to take it.

Despite having more knowledge at our fingertips than at any previous time in human history, in many countries literacy and numeracy skills are declining. Case in point: a 2022 study found that just 48.5% of Americans report having read at least one book in the last 12 months.

It is not only the technology. As Associate Professor Grant Blashki eloquently argues, how we use technology (and indeed, how we are steered towards using it) is where the problems arise.

For example, an observational study by researchers at McGill University in Canada found that regular use of GPS can lead to worsened spatial memory and an inability to navigate on your own. I cannot be the only one who has used Google Maps to get somewhere and had no idea how to get back.

Neuroscience has clearly demonstrated that struggling is good for the brain. Cognitive load theory explains that your brain needs to think about things in order to learn. It is hard to imagine struggling much when you search a question, read the AI summary and then call it a day.

Make the choice to think

I am not committing to never using GPS again, but given that Google’s AI Overviews are regularly unreliable, I would get rid of them if I could. Unfortunately, there is no way to do that for now.

Even hacks like adding a cuss word to your query no longer work. (And while using the F-word still works most of the time, it also makes for weirder and more, uh, ‘adult-oriented’ search results that you are probably not looking for.)

Of course, I will still use Google, because it is Google. It is not going to reverse its AI ambitions any time soon, and while I could wish for it to restore the option to opt out of AI Overviews, it is probably a case of better the devil you know.

Right now, the only true defense against AI misinformation is to make a concerted effort not to use it. Let it take notes in your work meetings or think up some pick-up lines, but when it comes to using it as a source of information, I will be scrolling past it and looking for a quality human-written (or at least human-checked) article from the top results, as I have done for almost my entire existence.

I mentioned earlier that one day these AI tools may genuinely become a reliable source of information. They may even be smart enough to take on politics. But today is not that day.

In fact, as reported on May 5 by the New York Times, as the AI tools from Google and ChatGPT become more powerful, they are also becoming increasingly unreliable, so I am not sure that I will ever trust them to summarize the policies of any political candidate.

When the hallucination rates of these ‘reasoning systems’ were tested, the highest recorded rate was 79%. Amr Awadallah, the chief executive of Vectara (an AI agent and assistant platform for enterprises), put it bluntly: “Despite our best efforts, they will always hallucinate.”