- Liquid cooling is no longer optional; it is the only way to withstand the thermal load of AI hardware

- The jump to 400VDC borrows heavily from electric vehicle supply chains and design logic

- Google’s TPU supercomputers now run at gigawatt scale with 99.999% uptime

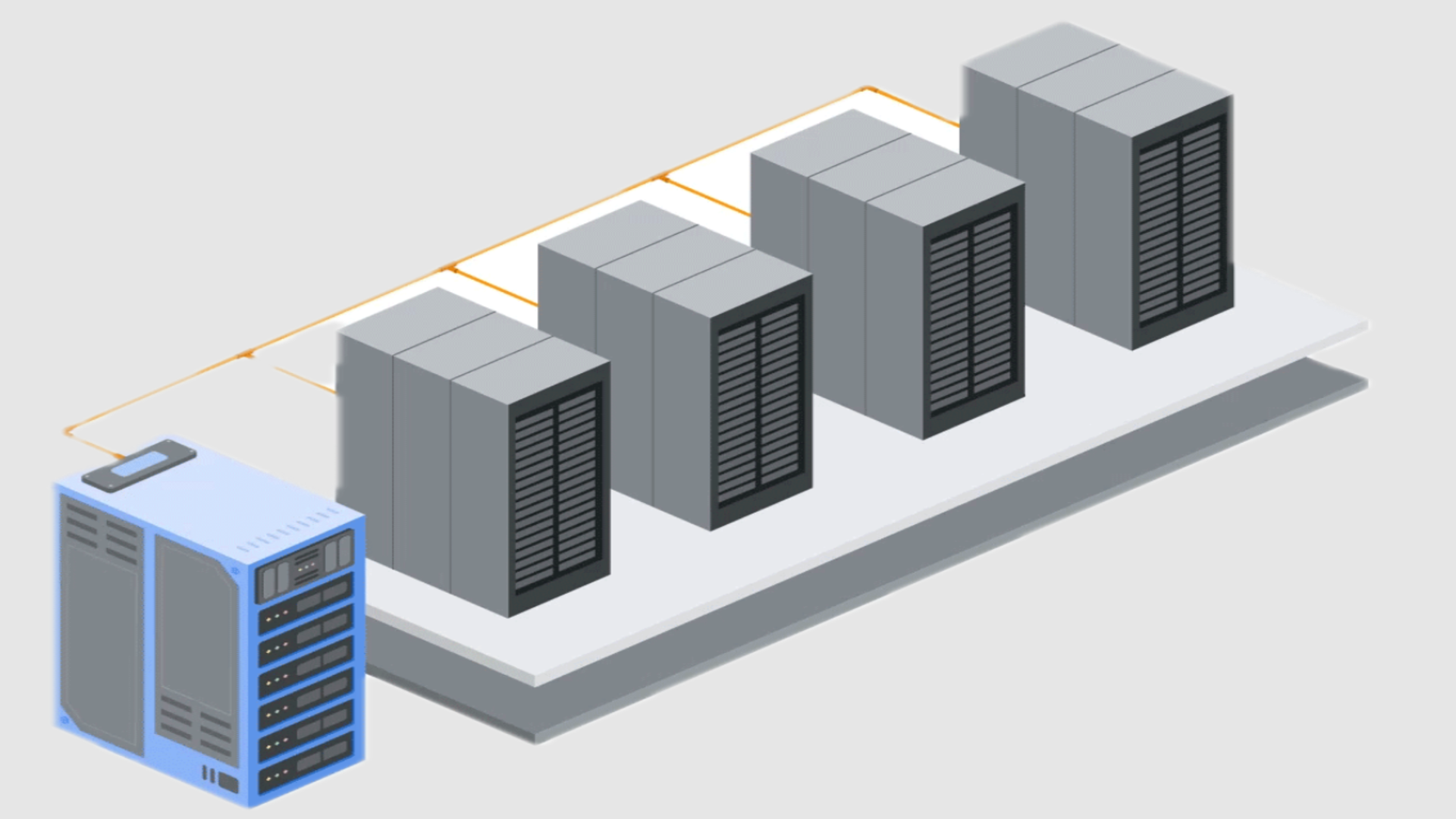

As demand for artificial intelligence workloads intensifies, the physical infrastructure of data centers is undergoing rapid and radical change.

Companies such as Google, Microsoft and Meta are now drawing on technologies first developed for electric vehicles (EVs), particularly 400VDC systems, to address the dual challenges of high-density power delivery and thermal management.

The emerging vision is of data center racks capable of delivering up to 1 MW of power, paired with liquid cooling systems engineered to manage the resulting heat.

Borrowing EV technology for data center evolution

The shift to 400VDC power distribution marks a decisive break from legacy systems. Google previously championed the industry's move from 12VDC to 48VDC, but the current transition to +/-400VDC is being enabled by EV supply chains and driven by necessity.
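The appeal of a higher bus voltage follows from basic arithmetic: for a fixed load, current scales inversely with voltage. The sketch below is an illustration rather than anything from Google's design documents. It applies I = P / V to the 1 MW rack figure, ignores conversion losses, and assumes the load on a +/-400V bus sees the full 800 V between the two poles. The function name `bus_current_amps` is hypothetical.

```python
def bus_current_amps(power_watts: float, bus_volts: float) -> float:
    """Ideal DC current for a given load: I = P / V (losses ignored)."""
    if bus_volts <= 0:
        raise ValueError("bus voltage must be positive")
    return power_watts / bus_volts


RACK_POWER_W = 1_000_000  # the 1 MW rack figure cited in the article

# 48 V and 400 V come from the article; 800 V is the pole-to-pole voltage
# of a +/-400 V bus (an assumption about how the load is connected).
for volts in (48, 400, 800):
    amps = bus_current_amps(RACK_POWER_W, volts)
    print(f"{volts:>4} V bus -> {amps:,.0f} A")
```

On these assumptions a 1 MW rack would draw roughly 20,800 A at 48 V, against 2,500 A at 400 V, which is why conductor size and resistive losses become hard to manage at the lower voltage.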

The Mount Diablo initiative, backed by Meta, Microsoft and the Open Compute Project (OCP), aims to standardize interfaces at this voltage level.

Google says this architecture is a pragmatic step that frees up valuable rack space for compute resources by decoupling power delivery from IT racks via AC-to-DC sidecar units. It also improves efficiency by about 3%.

Cooling, however, has become an equally pressing issue. With next-generation chips each consuming upwards of 1,000 watts, traditional air cooling is becoming increasingly obsolete.

Liquid cooling has emerged as the only scalable solution for managing heat in high-density compute environments.
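To get a feel for what removing that heat involves, the standard heat-transport relation Q = ṁ · c_p · ΔT gives the coolant flow a loop would need. The sketch below is a rough estimate under stated assumptions, not a description of any vendor's system. It assumes plain water as the coolant, a 10 K temperature rise across the rack, and that all electrical power ends up as heat in the liquid. The function name and the 10 K figure are hypothetical.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water


def coolant_mass_flow_kg_s(
    heat_watts: float,
    delta_t_kelvin: float,
    specific_heat: float = WATER_SPECIFIC_HEAT_J_PER_KG_K,
) -> float:
    """Mass flow needed to carry away heat: m_dot = Q / (c_p * delta_T)."""
    if delta_t_kelvin <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_watts / (specific_heat * delta_t_kelvin)


# Assumed 10 K coolant temperature rise (illustrative, not from the article).
chip_flow = coolant_mass_flow_kg_s(1_000, 10.0)       # one 1,000 W chip
rack_flow = coolant_mass_flow_kg_s(1_000_000, 10.0)   # one 1 MW rack
print(f"per chip: {chip_flow:.3f} kg/s, per rack: {rack_flow:.1f} kg/s")
```

With these inputs a 1 MW rack needs roughly 24 kg of water per second, or about 24 liters per second. A smaller allowed temperature rise pushes the required flow up proportionally.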

Google has embraced this approach with full-scale deployments; its liquid-cooled TPU pods now operate at gigawatt scale and have delivered 99.999% uptime over the past seven years.
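An availability figure such as 99.999% is easier to judge when converted into allowed downtime. The short sketch below does that conversion for a 365.25-day year. It is generic arithmetic, not Google's own measurement method, and the function name is hypothetical.

```python
MINUTES_PER_YEAR = 365.25 * 24 * 60  # average year, including leap days


def downtime_minutes_per_year(uptime_percent: float) -> float:
    """Convert an availability percentage into minutes of downtime per year."""
    if not 0 <= uptime_percent <= 100:
        raise ValueError("uptime must be between 0 and 100 percent")
    return (1 - uptime_percent / 100) * MINUTES_PER_YEAR


for uptime in (99.99, 99.999):
    minutes = downtime_minutes_per_year(uptime)
    print(f"{uptime}% uptime -> {minutes:.1f} minutes of downtime per year")
```

Five nines works out to a little over five minutes of downtime per year, while four nines allows close to an hour.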

These systems have replaced large heatsinks with compact cold plates, shrinking the physical footprint of server hardware and quadrupling compute density compared with previous generations.

Yet despite these technical achievements, skepticism is warranted. The push toward 1 MW racks rests on the assumption that demand will keep rising, a trend that may not materialize as expected.

While Google's roadmap highlights AI's growing power needs, projecting more than 500 kW per rack by 2030, it remains uncertain whether these projections will hold across the broader market.

It is also worth noting that integrating EV-related technologies into data centers brings not only efficiency gains but also new complexities, particularly around safety and serviceability at high voltage.

Nonetheless, the collaboration between hyperscalers and the open hardware community signals a shared recognition that existing paradigms are no longer sufficient.

Via StorageReview